1. Introduction

A few years ago I watched a data center catch fire. Service for customers was interrupted, and it took several days to extinguish the fire. This is definitely a scenario you should be prepared for.

What if you have 3 data centers in 3 different places around the globe, but the primary and replica shards both end up in the same data center, and that data center is destroyed? Your data is lost. It is time to prepare for that.

In this knowledge article I explain how to configure Elasticsearch to allocate shards according to node location, so that a local disaster does not become a global one.

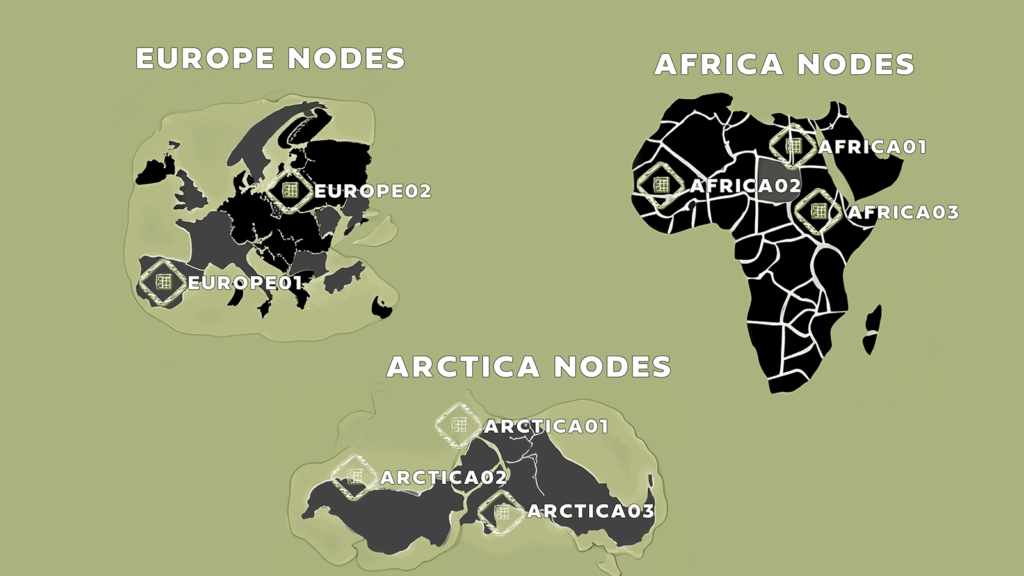

First I describe how to start an Elasticsearch cluster in a simulation that mimics 8 nodes located on 3 continents. You will start it and load a sample document. Then you will make sure the primary and replica shards are both in Europe and kill the containers that host the Europe nodes to simulate a disaster. Finally, you will start the cluster again with node attributes and allocation awareness to fix the issue.

Let’s start

2. Start Elasticsearch cluster

The first time, the cluster runs without node attributes so that the disaster can be simulated later. Note that I give Elasticsearch 2 GB of memory: a 2 GB heap via ES_JAVA_OPTS on the first node and a 2 GB container limit via -m on the others. With the default memory settings, Elasticsearch tends to die when another node tries to join it. You can use more than 2 GB.
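The docker run commands in this article attach every container to a Docker network named elknodes, so that network has to exist before the first node starts. A minimal sketch, assuming the default network driver is good enough for this simulation; ensure_network is my own helper name, not a Docker command:

```shell
# Create a user-defined Docker network unless it already exists.
# ensure_network is a hypothetical helper used only in this article.
ensure_network() {
  local name="$1"
  # "docker network inspect" exits non-zero when the network is missing.
  if ! docker network inspect "$name" >/dev/null 2>&1; then
    docker network create "$name"
  fi
}
```

Call `ensure_network elknodes` once before starting europe01.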

docker run --rm \
  --name europe01 \
  --net elknodes \
  -d \
  -e ES_JAVA_OPTS="-Xms2g -Xmx2g" \
  -e node.name="europe01" \
  -p 9200:9200 \
  docker.elastic.co/elasticsearch/elasticsearch:8.11.1

Set the password for the elastic user:

docker exec -it europe01 bash -c "(mkfifo pipe1); ( (elasticsearch-reset-password -u elastic -i < pipe1) & ( echo $'y\n123456\n123456' > pipe1) );sleep 5;rm pipe1"

Save the enrollment token in a variable so that the startup commands for the other nodes can use it:

token=`docker exec -it europe01 elasticsearch-create-enrollment-token -s node | tr -d '\r\n'`

Start seven additional nodes: a second Europe node and three nodes each for Africa and Arctica:

docker run --rm \
  -e ENROLLMENT_TOKEN="$token" \
  -e node.name="europe02" \
  --name europe02 \
  --net elknodes \
  -d \
  -m 2GB docker.elastic.co/elasticsearch/elasticsearch:8.11.1

docker run --rm \
  -e ENROLLMENT_TOKEN="$token" \
  -e node.name="africa01" \
  -p 9201:9200 \
  --name africa01 \
  --net elknodes \
  -d \
  -m 2GB docker.elastic.co/elasticsearch/elasticsearch:8.11.1

docker run --rm \
  -e ENROLLMENT_TOKEN="$token" \
  -e node.name="africa02" \
  --name africa02 \
  --net elknodes \
  -d \
  -m 2GB docker.elastic.co/elasticsearch/elasticsearch:8.11.1

docker run --rm \
  -e ENROLLMENT_TOKEN="$token" \
  -e node.name="africa03" \
  --name africa03 \
  --net elknodes \
  -d \
  -m 2GB docker.elastic.co/elasticsearch/elasticsearch:8.11.1

docker run --rm \
  -e ENROLLMENT_TOKEN="$token" \
  -e node.name="arctica01" \
  --name arctica01 \
  --net elknodes \
  -d \
  -m 2GB docker.elastic.co/elasticsearch/elasticsearch:8.11.1

docker run --rm \
  -e ENROLLMENT_TOKEN="$token" \
  -e node.name="arctica02" \
  --name arctica02 \
  --net elknodes \
  -d \
  -m 2GB docker.elastic.co/elasticsearch/elasticsearch:8.11.1

docker run --rm \
  -e ENROLLMENT_TOKEN="$token" \
  -e node.name="arctica03" \
  --name arctica03 \
  --net elknodes \
  -d \
  -m 2GB docker.elastic.co/elasticsearch/elasticsearch:8.11.1
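The seven commands above differ only in the node name and, for africa01, the published port. The same startup could be sketched as a loop. The helpers start_node and start_all_nodes and the DOCKER override are my own additions (set DOCKER=echo to print the commands instead of running them), and $token is assumed to hold the enrollment token saved earlier:

```shell
IMAGE="docker.elastic.co/elasticsearch/elasticsearch:8.11.1"

# Start one additional node. $1 is the node name; any further
# arguments are passed to docker run as extra flags.
start_node() {
  local name="$1"
  shift
  ${DOCKER:-docker} run --rm \
    -e ENROLLMENT_TOKEN="$token" \
    -e node.name="$name" \
    --name "$name" \
    --net elknodes \
    -d -m 2GB "$@" "$IMAGE"
}

# Start all seven additional nodes used in this article.
start_all_nodes() {
  local n
  # africa01 publishes port 9201 so the cluster stays reachable
  # from the host after the Europe nodes are gone.
  start_node africa01 -p 9201:9200
  for n in europe02 africa02 africa03 arctica01 arctica02 arctica03; do
    start_node "$n"
  done
}
```

Running `DOCKER=echo start_all_nodes` first is a cheap way to review the generated commands before starting any container.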

Make sure all nodes have joined the cluster:

curl -k -u elastic:123456 -XGET "https://localhost:9200/_cat/nodes?pretty&v"

Example response:

ip         heap.percent ram.percent cpu load_1m load_5m load_15m node.role   master name
172.26.0.5           40          74  18    5.22    2.13     0.78 cdfhilmrstw -      africa02
172.26.0.4           42          75  25    5.22    2.13     0.78 cdfhilmrstw -      africa01
172.26.0.2           33          73  30    5.22    2.13     0.78 cdfhilmrstw *      europe01
172.26.0.7           42          73  23    5.22    2.13     0.78 cdfhilmrstw -      arctica01
172.26.0.9           46          74  20    5.22    2.13     0.78 cdfhilmrstw -      arctica03
172.26.0.6           42          74  23    5.22    2.13     0.78 cdfhilmrstw -      africa03
172.26.0.3           43          73  24    5.22    2.13     0.78 cdfhilmrstw -      europe02
172.26.0.8           41          73  19    5.22    2.13     0.78 cdfhilmrstw -      arctica02
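Nodes need a little time to join, so the list may be incomplete right after startup. A small sketch of a wait loop, assuming the same credentials and port as in this article; count_nodes and wait_for_nodes are hypothetical helper names, and h=name asks _cat/nodes to print only the node name column:

```shell
# Count node names on stdin, one per line, ignoring blank lines.
count_nodes() {
  grep -c '[^[:space:]]'
}

# Poll _cat/nodes until at least $1 nodes are listed.
wait_for_nodes() {
  local expected="$1"
  until [ "$(curl -sk -u elastic:123456 \
      "https://localhost:9200/_cat/nodes?h=name" | count_nodes)" -ge "$expected" ]; do
    sleep 5
  done
}
```

For the cluster in this article the call would be `wait_for_nodes 8`.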

3. Load test data

Run the commands below to create the index and load a sample document:

curl -k -u elastic:123456 -XPUT "https://localhost:9200/customerdata" \
  -H 'content-type: application/json' -d'
{
  "settings": {
    "number_of_shards": 1,
    "number_of_replicas": 1
  },
  "mappings": {
    "properties": {
      "customer_name": {
        "type": "keyword"
      }
    }
  }
}'

curl -k -u elastic:123456 -XPUT "https://localhost:9200/customerdata/_doc/1" \
  -H 'content-type: application/json' -d'
{
  "customer_name": "Most Important"
}'

Run a search query to check that the data was loaded properly:

curl -k -u elastic:123456 -XGET "https://localhost:9200/customerdata/_search"

Example response:

{
  "took": 65,
  "timed_out": false,
  "_shards": {
    "total": 1,
    "successful": 1,
    "skipped": 0,
    "failed": 0
  },
  "hits": {
    "total": {
      "value": 1,
      "relation": "eq"
    },
    "max_score": 1.0,
    "hits": [
      {
        "_index": "customerdata",
        "_id": "1",
        "_score": 1.0,
        "_source": {
          "customer_name": "Most Important"
        }
      }
    ]
  }
}

3. Simulate disaster

3.1. Verify shard allocation

Check the current shard allocation to see where Elasticsearch has assigned the shards:

curl -k -u elastic:123456 -XGET "https://localhost:9200/_cat/shards?v&s=state:asc&index=customerdata"

Example response:

index        shard prirep state   docs store dataset ip         node
customerdata 0     p      STARTED    1   4kb     4kb 172.26.0.8 arctica02
customerdata 0     r      STARTED    1   4kb     4kb 172.26.0.5 africa02

Look at the response and work out which moves will put both shards on Europe nodes, such as europe01 and europe02. This simulates a possible assignment: right now Elasticsearch is perfectly happy to keep both shards in one location.

3.2. Move shards to simulate Europe hosting all data

Move the shard from africa02 to europe02:

curl -k -u elastic:123456 -XPOST "https://localhost:9200/_cluster/reroute" \
  -H 'content-type: application/json' -d'
{
  "commands": [
    {
      "move": {
        "index": "customerdata",
        "shard": 0,
        "from_node": "africa02",
        "to_node": "europe02"
      }
    }
  ]
}'

Then move the shard from arctica02 to europe01:

curl -k -u elastic:123456 -XPOST "https://localhost:9200/_cluster/reroute" \
  -H 'content-type: application/json' -d'
{
  "commands": [
    {
      "move": {
        "index": "customerdata",
        "shard": 0,
        "from_node": "arctica02",
        "to_node": "europe01"
      }
    }
  ]
}'

Now check the shard allocation again to be sure the shards were moved:

curl -k -u elastic:123456 -XGET "https://localhost:9200/_cat/shards?v&s=state:asc&index=customerdata"

You should see a similar result:

index        shard prirep state   docs store dataset ip         node
customerdata 0     p      STARTED    1 4.1kb   4.1kb 172.26.0.2 europe01
customerdata 0     r      STARTED    1 4.1kb   4.1kb 172.26.0.3 europe02
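The risky situation shown above can also be detected with a small filter instead of reading the table by eye. This is only a sketch: it assumes the node naming used in this article, where the location is the node name without its trailing digits, and shard_locations is a hypothetical helper name. It expects the two columns printed by _cat/shards with h=prirep,node:

```shell
# Read "prirep node" lines on stdin and print each distinct location.
# The location is derived from the node name by stripping trailing
# digits (europe01 -> europe), which only works for this naming scheme.
shard_locations() {
  awk 'NF >= 2 { sub(/[0-9]+$/, "", $2); print $2 }' | sort -u
}
```

It could be used as `curl -sk -u elastic:123456 "https://localhost:9200/_cat/shards/customerdata?h=prirep,node" | shard_locations`. If only one location is printed, every copy of the shard lives in the same place and a local disaster takes all of them.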

3.3. Stop containers located in Europe

Run the kill commands:

docker kill europe01

docker kill europe02

3.4. Verify if you can still search your data

Once done, check whether you can still search your data. Because europe01 is gone, the request goes to africa01 on port 9201:

curl -k -u elastic:123456 -XGET "https://localhost:9201/customerdata/_search"

Example response:

{
  "error": {
    "root_cause": [
      {
        "type": "no_shard_available_action_exception",
        "reason": null
      }
    ],
    "type": "search_phase_execution_exception",
    "reason": "all shards failed",
    "phase": "query",
    "grouped": true,
    "failed_shards": [
      {
        "shard": 0,
        "index": "customerdata",
        "node": null,
        "reason": {
          "type": "no_shard_available_action_exception",
          "reason": null
        }
      }
    ]
  },
  "status": 503
}

Look, you lost your data: both copies of the shard were on the Europe nodes, so no copy is left in the cluster.

4. Shard allocation awareness

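To make sure that the Europe nodes never host both the primary and the replica shard, you can introduce node attributes and enable shard allocation awareness in the cluster routing settings. Each node gets a custom attribute (node.attr.continent), and the cluster is told to take that attribute into account (cluster.routing.allocation.awareness.attributes). Before you start the cluster again, stop the six containers that are still running, because their names are still in use.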

4.1. Start first node with allocation awareness

docker run --rm \
  --name europe01 \
  --net elknodes \
  -d \
  -e ES_JAVA_OPTS="-Xms2g -Xmx2g" \
  -e node.name="europe01" \
  -p 9200:9200 \
  -e node.attr.continent="europe" \
  -e cluster.routing.allocation.awareness.attributes="continent" \
  docker.elastic.co/elasticsearch/elasticsearch:8.11.1

Reset the password for the elastic user again:

docker exec -it europe01 bash -c "(mkfifo pipe1); ( (elasticsearch-reset-password -u elastic -i < pipe1) & ( echo $'y\n123456\n123456' > pipe1) );sleep 5;rm pipe1"

Then obtain the enrollment token:

token=`docker exec -it europe01 elasticsearch-create-enrollment-token -s node | tr -d '\r\n'`

4.2. Run rest of the nodes

docker run --rm \
  -e ENROLLMENT_TOKEN="$token" \
  -e node.name="europe02" \
  -e node.attr.continent="europe" \
  -e cluster.routing.allocation.awareness.attributes="continent" \
  --name europe02 \
  --net elknodes \
  -d \
  -m 2GB docker.elastic.co/elasticsearch/elasticsearch:8.11.1

docker run --rm \
  -e ENROLLMENT_TOKEN="$token" \
  -e node.name="africa01" \
  -e node.attr.continent="africa" \
  -e cluster.routing.allocation.awareness.attributes="continent" \
  -p 9201:9200 \
  --name africa01 \
  --net elknodes \
  -d \
  -m 2GB docker.elastic.co/elasticsearch/elasticsearch:8.11.1

docker run --rm \
  -e ENROLLMENT_TOKEN="$token" \
  -e node.name="africa02" \
  -e node.attr.continent="africa" \
  -e cluster.routing.allocation.awareness.attributes="continent" \
  --name africa02 \
  --net elknodes \
  -d \
  -m 2GB docker.elastic.co/elasticsearch/elasticsearch:8.11.1

docker run --rm \
  -e ENROLLMENT_TOKEN="$token" \
  -e node.name="africa03" \
  -e node.attr.continent="africa" \
  -e cluster.routing.allocation.awareness.attributes="continent" \
  --name africa03 \
  --net elknodes \
  -d \
  -m 2GB docker.elastic.co/elasticsearch/elasticsearch:8.11.1

docker run --rm \
  -e ENROLLMENT_TOKEN="$token" \
  -e node.name="arctica01" \
  -e node.attr.continent="arctica" \
  -e cluster.routing.allocation.awareness.attributes="continent" \
  --name arctica01 \
  --net elknodes \
  -d \
  -m 2GB docker.elastic.co/elasticsearch/elasticsearch:8.11.1

docker run --rm \
  -e ENROLLMENT_TOKEN="$token" \
  -e node.name="arctica02" \
  -e node.attr.continent="arctica" \
  -e cluster.routing.allocation.awareness.attributes="continent" \
  --name arctica02 \
  --net elknodes \
  -d \
  -m 2GB docker.elastic.co/elasticsearch/elasticsearch:8.11.1

docker run --rm \
  -e ENROLLMENT_TOKEN="$token" \
  -e node.name="arctica03" \
  -e node.attr.continent="arctica" \
  -e cluster.routing.allocation.awareness.attributes="continent" \
  --name arctica03 \
  --net elknodes \
  -d \
  -m 2GB docker.elastic.co/elasticsearch/elasticsearch:8.11.1
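Before trying to move shards, it may be worth confirming that every node really carries the continent attribute. A minimal sketch, assuming the credentials and port used in this article; nodes_per_continent is a hypothetical helper that summarizes the node, attr and value columns of the _cat/nodeattrs API:

```shell
# Read "node attr value" lines on stdin and print how many nodes
# report each value of the custom "continent" attribute.
nodes_per_continent() {
  awk '$2 == "continent" { count[$3]++ }
       END { for (c in count) print c, count[c] }' | sort
}
```

It could be fed with `curl -sk -u elastic:123456 "https://localhost:9200/_cat/nodeattrs?h=node,attr,value" | nodes_per_continent`. For the cluster started above I would expect three lines, one each for africa, arctica and europe.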

4.3. Try to relocate shards to be in Europe

The new cluster starts empty, so first load the test data again as described in the Load test data section. Then repeat steps 3.1. and 3.2. of Simulate disaster. The reroute request should now fail, and the reason contains this decision:

[NO(there are [2] copies of this shard and [3] values for attribute [continent] ([africa, arctica, europe] from nodes in the cluster and no forced awareness) so there may be at most [1] copies of this shard allocated to nodes with each value, but (including this copy) there would be [2] copies allocated to nodes with [node.attr.continent: europe])]

It comes from a response like this one:

{

"error": {

"root_cause": [

{

"type": "illegal_argument_exception",

"reason": "[move_allocation] can't move 0, from {arctica01}{PSixX7NgRVizKsTkhrY6og}{4nnOC5c5R4OpxmVdhAJj6g}{arctica01}{172.26.0.7}{172.26.0.7:9300}{cdfhilmrstw}{8.11.1}{7000099-8500003}{continent=arctica, transform.config_version=10.0.0, xpack.installed=true, ml.allocated_processors=10, ml.max_jvm_size=1073741824, ml.config_version=11.0.0, ml.machine_memory=2147483648, ml.allocated_processors_double=10.0}, to {europe02}{Lyp_rRIGQ6e4saG21vpb9A}{T5-Vyr5sR-6rxWtGZiCJmA}{europe02}{172.26.0.3}{172.26.0.3:9300}{cdfhilmrstw}{8.11.1}{7000099-8500003}{continent=europe, xpack.installed=true, transform.config_version=10.0.0, ml.allocated_processors_double=10.0, ml.machine_memory=2147483648, ml.config_version=11.0.0, ml.max_jvm_size=1073741824, ml.allocated_processors=10}, since its not allowed, reason: [YES(shard has no previous failures)][YES(primary shard for this replica is already active)][YES(explicitly ignoring any disabling of allocation due to manual allocation commands via the reroute API)][YES(can allocate replica shard to a node with version [8.11.1] since this is equal-or-newer than the primary version [8.11.1])][YES(the shard is not being snapshotted)][YES(ignored as shard is not being recovered from a snapshot)][YES(no nodes are shutting down)][YES(there are no ongoing node replacements)][YES(node passes include/exclude/require filters)][YES(this node does not hold a copy of this shard)][YES(enough disk for shard on node, free: [21.9gb], used: [78.5%], shard size: [4kb], free after allocating shard: [21.9gb])][YES(below shard recovery limit of outgoing: [0 < 2] incoming: [0 < 2])][YES(total shard limits are disabled: [index: -1, cluster: -1] <= 0)][NO(there are [2] copies of this shard and [3] values for attribute [continent] ([africa, arctica, europe] from nodes in the cluster and no forced awareness) so there may be at most [1] copies of this shard allocated to nodes with each value, but (including this copy) there would be [2] copies allocated to nodes with 
[node.attr.continent: europe])][YES(index has a preference for tiers [data_content] and node has tier [data_content])][YES(shard is not a follower and is not under the purview of this decider)][YES(decider only applicable for indices backed by searchable snapshots)][YES(this decider only applies to indices backed by searchable snapshots)][YES(decider only applicable for indices backed by searchable snapshots)][YES(this node's data roles are not exactly [data_frozen] so it is not a dedicated frozen node)][YES(decider only applicable for indices backed by archive functionality)]"

}

],

"type": "illegal_argument_exception",

"reason": "[move_allocation] can't move 0, from {arctica01}{PSixX7NgRVizKsTkhrY6og}{4nnOC5c5R4OpxmVdhAJj6g}{arctica01}{172.26.0.7}{172.26.0.7:9300}{cdfhilmrstw}{8.11.1}{7000099-8500003}{continent=arctica, transform.config_version=10.0.0, xpack.installed=true, ml.allocated_processors=10, ml.max_jvm_size=1073741824, ml.config_version=11.0.0, ml.machine_memory=2147483648, ml.allocated_processors_double=10.0}, to {europe02}{Lyp_rRIGQ6e4saG21vpb9A}{T5-Vyr5sR-6rxWtGZiCJmA}{europe02}{172.26.0.3}{172.26.0.3:9300}{cdfhilmrstw}{8.11.1}{7000099-8500003}{continent=europe, xpack.installed=true, transform.config_version=10.0.0, ml.allocated_processors_double=10.0, ml.machine_memory=2147483648, ml.config_version=11.0.0, ml.max_jvm_size=1073741824, ml.allocated_processors=10}, since its not allowed, reason: [YES(shard has no previous failures)][YES(primary shard for this replica is already active)][YES(explicitly ignoring any disabling of allocation due to manual allocation commands via the reroute API)][YES(can allocate replica shard to a node with version [8.11.1] since this is equal-or-newer than the primary version [8.11.1])][YES(the shard is not being snapshotted)][YES(ignored as shard is not being recovered from a snapshot)][YES(no nodes are shutting down)][YES(there are no ongoing node replacements)][YES(node passes include/exclude/require filters)][YES(this node does not hold a copy of this shard)][YES(enough disk for shard on node, free: [21.9gb], used: [78.5%], shard size: [4kb], free after allocating shard: [21.9gb])][YES(below shard recovery limit of outgoing: [0 < 2] incoming: [0 < 2])][YES(total shard limits are disabled: [index: -1, cluster: -1] <= 0)][NO(there are [2] copies of this shard and [3] values for attribute [continent] ([africa, arctica, europe] from nodes in the cluster and no forced awareness) so there may be at most [1] copies of this shard allocated to nodes with each value, but (including this copy) there would be [2] copies allocated to nodes with 
[node.attr.continent: europe])][YES(index has a preference for tiers [data_content] and node has tier [data_content])][YES(shard is not a follower and is not under the purview of this decider)][YES(decider only applicable for indices backed by searchable snapshots)][YES(this decider only applies to indices backed by searchable snapshots)][YES(decider only applicable for indices backed by searchable snapshots)][YES(this node's data roles are not exactly [data_frozen] so it is not a dedicated frozen node)][YES(decider only applicable for indices backed by archive functionality)]"

},

"status": 400

}

5. Conclusion

You simulated a disaster and then fixed the issue by applying shard allocation awareness. While nodes on other continents are available, Elasticsearch will not let you keep the primary and replica copies of a shard on a single continent. This keeps your data safe from the consequences of a local disaster.