
1. Introduction

Have you ever wondered what happens when you update a document in Elasticsearch? In this article I explain, with a working example, what goes on under the hood.

2. Start ELK cluster

I have prepared a ready-to-use project for you. Simply unpack it and run the script start_docker_compose_cluster.sh. If you need more details, see the full article about this project, which explains how to start a secured Elasticsearch and Kibana together.

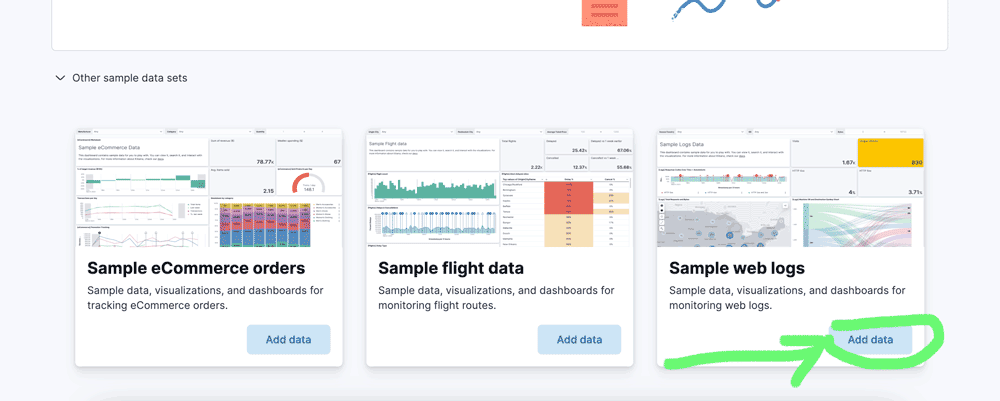

3. Enable Sample Dataset

Install the sample dataset called kibana_sample_data_logs in Kibana. On the welcome screen, in the section “Get started by adding integrations”, click the button “Try sample data”. On the new page, expand “Other sample data sets” and add “Sample web logs”.

After that you can see that a new data stream has been added.

4. Perform update

4.1. Number of deletions before

You can check in Dev Tools how many documents are currently marked as deleted.

GET _cat/indices/*kibana_sample_data_logs*?v&h=index,docs.count,docs.deleted,store.size

The above should return:

index                                         docs.count docs.deleted store.size
.ds-kibana_sample_data_logs-2025.09.04-000001      14074            0      8.4mb

Nothing has been deleted yet, so it is time to update the index.
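If you want to read these numbers from a script rather than by eye, the column-aligned text can be split on whitespace. The following is only a minimal sketch: the helper name parse_cat_indices is made up for this article, and it assumes that none of the requested columns contain spaces, which holds for the four columns used here.

```python
def parse_cat_indices(text):
    """Parse the text returned by `_cat/indices?v` into a list of dicts.

    Assumption: column values never contain whitespace, so a plain
    split() is enough to separate them.
    """
    lines = [line for line in text.splitlines() if line.strip()]
    if not lines:
        return []
    header = lines[0].split()  # column names from the `v` header row
    return [dict(zip(header, line.split())) for line in lines[1:]]


# Output copied from the request above.
sample = """\
index                                         docs.count docs.deleted store.size
.ds-kibana_sample_data_logs-2025.09.04-000001      14074            0      8.4mb
"""

for row in parse_cat_indices(sample):
    print(row["index"], "live:", int(row["docs.count"]),
          "deleted:", int(row["docs.deleted"]))
```

All values come back as strings, so convert docs.count and docs.deleted with int() before doing arithmetic on them.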

4.2. Perform update

Run the request below in the console:

POST kibana_sample_data_logs/_update_by_query
{
  "query": {
    "range": {
      "timestamp": {
        "gte": "now",
        "lte": "now+30m"
      }
    }
  },
  "script": {
    "source": "ctx._source['bytes'] *= 999; "
  }
}

The response should be:

{
  "took": 6,
  "timed_out": false,
  "total": 8,
  "updated": 8,
  "deleted": 0,
  "batches": 1,
  "version_conflicts": 0,
  "noops": 0,
  "retries": {
    "bulk": 0,
    "search": 0
  },
  "throttled_millis": 0,
  "requests_per_second": -1,
  "throttled_until_millis": 0,
  "failures": []
}

You can see that 8 documents were updated (the "updated" field; "took" is only the duration in milliseconds). But now, when you check the indices list with the _cat endpoint, you will notice that 8 documents were also marked as deleted at the same time.

GET _cat/indices/*kibana_sample_data_logs*?v&h=index,docs.count,docs.deleted,store.size

Results:

index                                         docs.count docs.deleted store.size
.ds-kibana_sample_data_logs-2025.09.04-000001      14074            8      8.4mb

If you run the same _update_by_query multiple times, docs.deleted will increase even more.
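It is easy to misread the _update_by_query response, because "took" and "updated" sit close to each other. The sketch below pulls out the fields that matter for this walkthrough. The function name summarize_update_by_query and the definition of a "clean" run are assumptions made for illustration, not part of any Elasticsearch client.

```python
import json


def summarize_update_by_query(response):
    """Pick the fields of an _update_by_query response used in this article."""
    return {
        "matched": response["total"],     # documents selected by the query
        "updated": response["updated"],   # documents actually rewritten
        "duration_ms": response["took"],  # elapsed time, not a document count
        # Hypothetical notion of a clean run: every match updated, no problems.
        "clean": (
            response["updated"] == response["total"]
            and response["version_conflicts"] == 0
            and not response["failures"]
            and not response["timed_out"]
        ),
    }


# Response body copied from the request in this section.
raw = """
{"took": 6, "timed_out": false, "total": 8, "updated": 8, "deleted": 0,
 "batches": 1, "version_conflicts": 0, "noops": 0,
 "retries": {"bulk": 0, "search": 0}, "throttled_millis": 0,
 "requests_per_second": -1, "throttled_until_millis": 0, "failures": []}
"""

summary = summarize_update_by_query(json.loads(raw))
print(summary)
```

The "updated" value is the one to compare with the change in docs.deleted reported by the _cat API.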

5. Why Update Deletes?

You may now be wondering why updating documents creates deleted documents. The reason is that stored documents are immutable (the Lucene segments that hold them are written once), so you cannot actually update them in place. In the background, Elasticsearch creates a copy of each document selected by the query, applies the changes to it, and saves it in the same index.

Physically removing the old version is a costly operation that would slow down ingestion. Therefore _update_by_query only marks the old documents as deleted and inserts the new versions. This is what you see in the docs.deleted column of the _cat API.
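The mark-as-deleted-and-append behavior described above can be imitated with a toy model. This is only a sketch of the idea, not how Lucene is implemented: the class ToySegmentStore and all of its methods are invented for this illustration.

```python
class ToySegmentStore:
    """Toy append-only store: an entry is never edited, only flagged as deleted."""

    def __init__(self):
        # Each entry: {"id", "version", "source", "deleted"}
        self.entries = []

    def _live(self, doc_id):
        """Return the current (not deleted) entry for doc_id, or None."""
        for entry in self.entries:
            if entry["id"] == doc_id and not entry["deleted"]:
                return entry
        return None

    def index(self, doc_id, source):
        """Store a brand new document at version 1."""
        self.entries.append(
            {"id": doc_id, "version": 1, "source": dict(source), "deleted": False}
        )

    def update(self, doc_id, change):
        """Copy the live version, apply the change, flag the old one as deleted."""
        old = self._live(doc_id)
        if old is None:
            raise KeyError(doc_id)
        new_source = dict(old["source"])
        change(new_source)
        old["deleted"] = True
        self.entries.append(
            {"id": doc_id, "version": old["version"] + 1,
             "source": new_source, "deleted": False}
        )

    def docs_count(self):
        return sum(1 for e in self.entries if not e["deleted"])

    def docs_deleted(self):
        return sum(1 for e in self.entries if e["deleted"])


def multiply_bytes(source):
    # Same change as the Painless script used in this article.
    source["bytes"] *= 999


store = ToySegmentStore()
store.index("a", {"bytes": 10})
store.index("b", {"bytes": 20})
store.update("a", multiply_bytes)
store.update("a", multiply_bytes)
print("docs.count:", store.docs_count(), "docs.deleted:", store.docs_deleted())
```

In this model the live count stays the same after each update while the deleted count grows by one, which matches what the _cat output shows.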

5.1. See how a document changes

You can verify this behavior by selecting a document by its _id field. First, get some sample documents:

GET .ds-kibana_sample_data_logs-2025.09.04-000001/_search

Here you can see which fields the documents contain. Note down the _id of one document.

"hits": [
  {
    "_index": ".ds-kibana_sample_data_logs-2025.09.04-000001",
    "_id": "kel2FJkB_UCnX8C1b1wU",
    "_score": 1,

You can then search for the document with exactly that _id:

GET .ds-kibana_sample_data_logs-2025.09.04-000001/_search
{
  "query": {
    "term": {
      "_id": {
        "value": "kel2FJkB_UCnX8C1b1wU"
      }
    }
  }
}

After a successful run, modify the update by query API call so that it uses the same query.

POST kibana_sample_data_logs/_update_by_query
{
  "query": {
    "term": {
      "_id": {
        "value": "kel2FJkB_UCnX8C1b1wU"
      }
    }
  },
  "script": {
    "source": "ctx._source['bytes'] *= 999; "
  }
}

{
  "took": 8,
  "timed_out": false,
  "total": 1,
  "updated": 1,
  "deleted": 0,
  "batches": 1,
  "version_conflicts": 0,
  "noops": 0,
  "retries": {
    "bulk": 0,
    "search": 0
  },
  "throttled_millis": 0,
  "requests_per_second": -1,
  "throttled_until_millis": 0,
  "failures": []
}

Then check the same document by _id and verify that its version is higher.

GET .ds-kibana_sample_data_logs-2025.09.04-000001/_search?version=true
{
  "query": {
    "term": {
      "_id": {
        "value": "kel2FJkB_UCnX8C1b1wU"
      }
    }
  }
}

Response:

"hits": [
  {
    "_index": ".ds-kibana_sample_data_logs-2025.09.04-000001",
    "_id": "kel2FJkB_UCnX8C1b1wU",
    "_version": 2,
    "_score": 1,

5.2. Segment merges

From time to time Elasticsearch merges segments. Segments are the building blocks of an Apache Lucene index, and a Lucene index is the equivalent of an Elasticsearch shard. A merge writes a new segment without the documents that were marked as deleted, so the count of deleted documents decreases every time a merge happens. You can observe that via the _cat API:

GET _cat/indices/*kibana_sample_data_logs*?v&h=index,docs.count,docs.deleted,store.size
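To make the effect of a merge concrete, here is a toy model of it. It is a sketch under simplifying assumptions: a segment is just a list of (doc_id, version, deleted) tuples, and the function names merge_segments and count_deleted are invented for this article. Real merges are chosen by a merge policy and usually combine only some segments at a time.

```python
def merge_segments(segments):
    """Toy merge: copy every live entry into one new segment, drop deleted ones."""
    merged = []
    for segment in segments:
        for doc_id, version, deleted in segment:
            if not deleted:
                merged.append((doc_id, version, False))
    return merged


def count_deleted(segments):
    """Number of entries flagged as deleted across all given segments."""
    return sum(1 for segment in segments for _, _, deleted in segment if deleted)


# Document "a" was updated twice, so two of its old versions are flagged as deleted.
segments = [
    [("a", 1, True), ("b", 1, False)],
    [("a", 2, True), ("c", 1, False)],
    [("a", 3, False)],
]

before = count_deleted(segments)
merged = merge_segments(segments)
after = count_deleted([merged])
print("deleted before merge:", before, "after merge:", after)
```

Note that the surviving entry for "a" still carries version 3, so the number of updates can be recovered from the versions even after the deleted entries are gone.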

You can still calculate how many updates were applied: it is the sum, over all documents, of the _version field value minus one.

GET kibana_sample_data_logs/_search
{
  "runtime_mappings": {
    "my_version": {
      "type": "long",
      "script": {
        "source": "emit(doc['_version'].value-1)"
      }
    }
  },
  "aggs": {
    "version_sum": {
      "sum": {
        "field": "my_version"
      }
    }
  },
  "size": 0
}

{
  "took": 1,
  "timed_out": false,
  "_shards": {
    "total": 1,
    "successful": 1,
    "skipped": 0,
    "failed": 0
  },
  "hits": {
    "total": {
      "value": 10000,
      "relation": "gte"
    },
    "max_score": null,
    "hits": []
  },
  "aggregations": {
    "version_sum": {
      "value": 49
    }
  }
}

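If you do not subtract 1 from the version value, the result equals the number of live documents in the index plus the number of updates applied so far, that is, plus every superseded version, whether it is still marked as deleted or has already been merged away.

"source": "emit(doc['_version'].value)"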

{
  "took": 0,
  "timed_out": false,
  "_shards": {
    "total": 1,
    "successful": 1,
    "skipped": 0,
    "failed": 0
  },
  "hits": {
    "total": {
      "value": 10000,
      "relation": "gte"
    },
    "max_score": null,
    "hits": []
  },
  "aggregations": {
    "version_sum": {
      "value": 14123
    }
  }
}

Looking at the current docs.deleted:

index                                         docs.count docs.deleted store.size
.ds-kibana_sample_data_logs-2025.09.04-000001      14074           15      8.4mb

You can calculate how many superseded document versions have already been cleaned up by a merge.

Already_merged = 14123 - 14074 - 15 = 34
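The same arithmetic as a small function, in case you want to repeat it with your own numbers. It is a sketch that assumes every document started at version 1 and that each update raised the version by exactly one; the function name already_merged simply mirrors the label used above.

```python
def already_merged(version_sum, docs_count, docs_deleted):
    """Superseded document versions that merges have already removed.

    version_sum  - sum of _version over all live documents
    docs_count   - live documents (docs.count from the _cat API)
    docs_deleted - versions still marked as deleted (docs.deleted)
    """
    total_updates = version_sum - docs_count  # one extra version per update
    return total_updates - docs_deleted


# Numbers taken from the outputs in this section.
print(already_merged(14123, 14074, 15))
```

The intermediate value 14123 - 14074 is 49, the same number the first aggregation returned when 1 was subtracted from every version.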

5.3. Force merge

There is a way to force Elasticsearch to merge segments.

POST /kibana_sample_data_logs/_forcemerge?only_expunge_deletes=true

Response:

{
  "_shards": {
    "total": 1,
    "successful": 1,
    "failed": 0
  }
}

If you check the indices again, you can see zero documents marked for deletion. All gaps are filled now.

index                                         docs.count docs.deleted store.size
.ds-kibana_sample_data_logs-2025.09.04-000001      14074            0      8.4mb

All the original versions of the updated documents have now been physically removed:

Already_merged = 14123 - 14074 - 0 = 49

6. Final thoughts

In this article you have learned how Elasticsearch handles updated documents and why it marks the old versions as deleted. You also practiced running queries on a working cluster.

Happy coding!