Table of Contents

0. Introduction

This is continuation of article created before

Elasticsearch backup & restore to S3 SIA using docker swarm with secrets

This time I am presenting IPFS storage instead of SIA. Honestly speaking I don’t see anyone will store confidential data on IPFS even if encrypted. This can be good for testing purposes when data do not need to be protected. I can imagine twitter data if someone want to write an application to index twitter data into Elasticsearch or even better Neo4j.

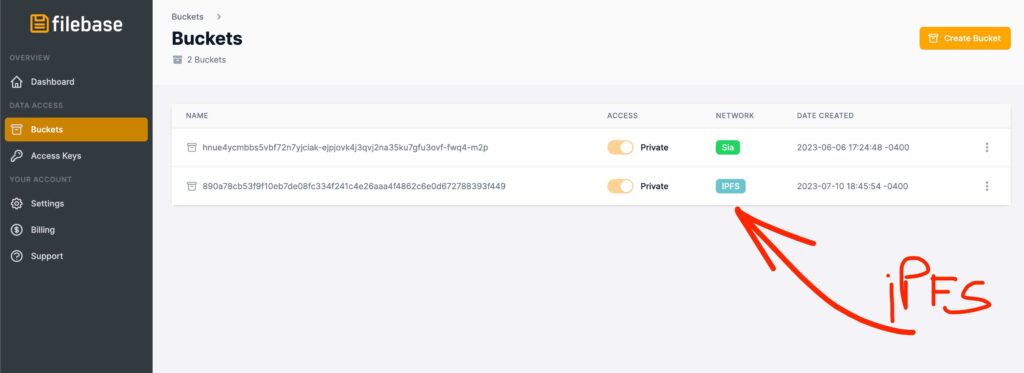

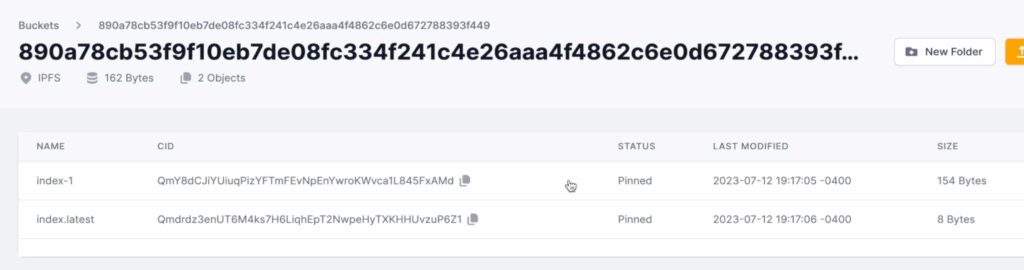

1. Create IPFS “Private” Bucket

Actually IPFS bucket is not so private as everyone can access it using for example Brave web browser with link https://dweb.link/ipfs/<CID> where <CID> you replace with actual address CID . List of public gateways you can check here

It is called as private in Filebase because with free plan user cannot use their gateway to access it as web2 content. Elasticsearch backup files are not encrypted so anyone who has access to that repository data can restore it to his own cluster – simple. Creating bucket itself is same process as SIA bucket. Only IPFS label appears afterwards.

As name I have used first 63 characters of sha-256 sum of the word “s3”.

echo s3 | shasum -a 256 | head -c 63

2. Connect to storage and validate

Different bucket name – that’s it.

GET https://890a78cb53f9f10eb7de08fc334f241c4e26aaa4f4862c6e0d672788393f449.s3.filebase.com

<?xml version="1.0" encoding="UTF-8"?>

<ListBucketResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">

<Name>890a78cb53f9f10eb7de08fc334f241c4e26aaa4f4862c6e0d672788393f449</Name>

<Prefix/>

<Marker/>

<MaxKeys>1000</MaxKeys>

<IsTruncated>false</IsTruncated>

</ListBucketResult>

3. Start docker Elasticsearch container

Procedure is exactly same as it was with SIA.

docker swarm init;

# type access key and secret key separated by new line after docker secret command like below

# C13DEC58EA12B338D334

# BNjBdwGx35HApkaxaye62atGZJlFbfya0nPztAlj

docker secret create filebase_accesskey_secretkey -;

docker service create \

--name elastic \

--publish published=9200,target=9200 \

--mount type=volume,src=elkdata,dst=/usr/share/elasticsearch/data \

--mount type=volume,src=elkconf,dst=/usr/share/elasticsearch/config \

--secret filebase_accesskey_secretkey \

docker.elastic.co/elasticsearch/elasticsearch:8.8.1;

# login to execute commands

dockerContainerID=`docker ps -l --format "{{.ID}}"`;

docker exec -it $dockerContainerID sh;

sh-5.0$ elasticsearch-reset-password -u elastic -i --url https://localhost:9200

sh-5.0$ cat /run/secrets/filebase_accesskey_secretkey | elasticsearch-keystore add --stdin s3.client.filebase.access_key s3.client.filebase.secret_key

sh-5.0$ elasticsearch-keystore passwd

Because I mounted ‘/usr/share/elasticsearch/config’ into volume and there is elasticsearch.keystore file I run ‘elasticsearch-keystore passwd’ command to setup password for this storage and keep it encrypted instead just obfuscated.

sh-5.0$ ls -lt /usr/share/elasticsearch/config

total 68

-rw-rw-r-- 1 elasticsearch root 1106 Jul 11 21:45 elasticsearch.yml

-rw-rw---- 1 elasticsearch root 439 Jul 11 21:45 elasticsearch.keystore

drwxr-x--- 2 elasticsearch root 4096 Jul 11 21:45 certs

-rw-rw-r-- 1 root root 12549 Jun 5 21:37 log4j2.properties

-rw-rw-r-- 1 root root 17969 Jun 5 21:34 log4j2.file.properties

-rw-rw-r-- 1 root root 473 Jun 5 21:34 role_mapping.yml

-rw-rw-r-- 1 root root 197 Jun 5 21:34 roles.yml

-rw-rw-r-- 1 root root 0 Jun 5 21:34 users

-rw-rw-r-- 1 root root 0 Jun 5 21:34 users_roles

drwxrwxr-x 2 elasticsearch root 4096 Jun 5 21:33 jvm.options.d

-rw-rw-r-- 1 root root 1042 Jun 5 21:31 elasticsearch-plugins.example.yml

-rw-rw-r-- 1 root root 2569 Jun 5 21:31 jvm.options

sh-5.0$ exit;

Elasticsearch is running. Because keystore file get modified reload of secure settings is needed. Settings we added are hot and can be reloaded using API call. Use Postman or curl to do it.

docker cp $dockerContainerID:/usr/share/elasticsearch/config/certs/http_ca.crt .

curl --cacert http_ca.crt -XPOST -u elastic 'https://localhost:9200/_nodes/reload_secure_settings' \

--header 'Content-Type: application/json'-d'

{

"secure_settings_password":"SomePasswordYouSetup"

}'

6. Register repository for backup

Now time to work on Elasticsearch. First register repository that will be used for backup on Elasticsearch. I used name ipfsrepo.

curl --cacert http_ca.crt -XPUT -u elastic 'https://localhost:9200/_snapshot/ipfsrepo?verify=true' \

--header 'Content-Type: application/json' -d'

{

"type":"s3",

"settings":{

"bucket":"890a78cb53f9f10eb7de08fc334f241c4e26aaa4f4862c6e0d672788393f449",

"client":"filebase",

"region":"us-east-1",

"endpoint":"s3.filebase.com"

}

}'

Now you can load your test data data.

7. Load sample data

I created simple 2 documents to load into index. Of course you can load more , exactly up to 5GB because this is free storage on IPFS network with free account on Filebase.

curl --cacert http_ca.crt -XPOST -u elastic 'https://localhost:9200/storage/_bulk' \

--header 'Content-Type: application/json' -d'

{"index":{"_id":"cloud"}}

{"type":"Centralized","owner":"Big Corporation","country":"USA"}

{"index":{"_id":"ipfs"}}

{"type":"Distributed","owner":"The People","country":"World"}

'

8. Create snapshot (backup)

Action from article title. Backup data to S3 IPFS storage is happening now.

Please execute

curl --cacert http_ca.crt -XPUT 'https://localhost:9200/_snapshot/ipfsrepo/snapshot_20230709?wait_for_completion=true' \

--header 'Content-Type: application/json' -d'

{

"indices":"*,-.*",

"include_global_state":"false"

}'

9. Delete data

Because I trust what I did I am going to delete all data I loaded. Please be with me

curl --cacert http_ca.crt -u elastic -XDELETE 'https://localhost:9200/storage'

To be sure if data is delete, search for it

curl --cacert http_ca.crt -u elastic -XGET 'https://localhost:9200/storage/_search'

10. Restore snapshot (backup)

Now restore to have it back.

curl --cacert http_ca.crt -u elastic -XPOST 'https://localhost:9200/_snapshot/ipfsrepo/snapshot_20230709/_restore' \

--header 'Content-Type: application/json' -d'

{

"indices":"*"

}'

Search again to confirm that data get restored.

curl --cacert http_ca.crt -u elastic -XGET 'https://localhost:9200/storage/_search'

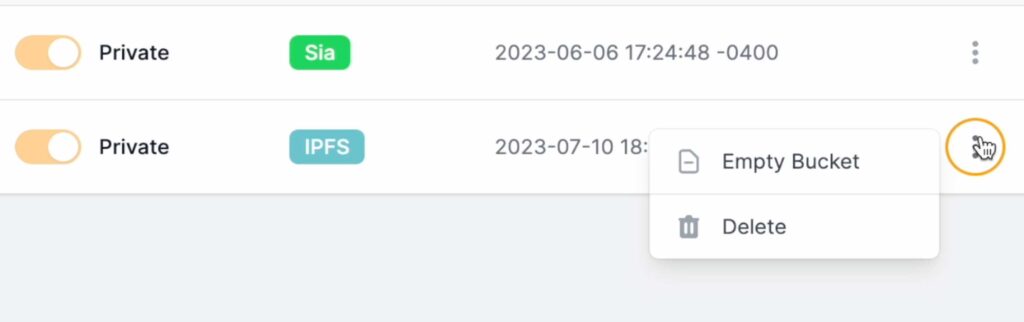

11. Clean up

Cleaning is optional but it helps keep things neat.

First delete Elasticsearch repository. This could clean up IPFS storage on Filebase but unfortunately leaving few files.

curl --cacert http_ca.crt -u elastic -XDELETE 'https://localhost:9200/_snapshot/my_s3_filebase_repository/snapshot_20230709/'

What to do? Just clean them manually using Filebase UI.

With docker there are 2 actions – closing swarm and deleting created volumes. If you plan to start another cluster with same volumes then it might cause issues as it will mix with previous cluster metadata so let’s keep it clean.

docker swarm leave --force

# remove volumes

docker volume rm elkconf

docker volume rm elkdata

12. Summary

In the tutorial you have learned how to create Filebase IPFS bucket and connect to that bucket using Postman. Later on you started 1 node Elasticsearch cluster on 1 node swarm cluster and you utilize docker secrets for S3 credentials. With Elasticsearch you created repository that pointed to IPFS “private” (only from Filebase gateway perspective) unencrypted bucket. Then you loaded sample data to Elasticsearch which you secure backing them up on decentralized storage. Once data was back up you deleted that data on Elasticearch, once done – you restore it. Finally clean up was done.

I hope you enjoy it like me. I will continue with other tutorials like that so you can subscribe to my YouTube channel and bookmark this blog.

Have a nice coding 🙂